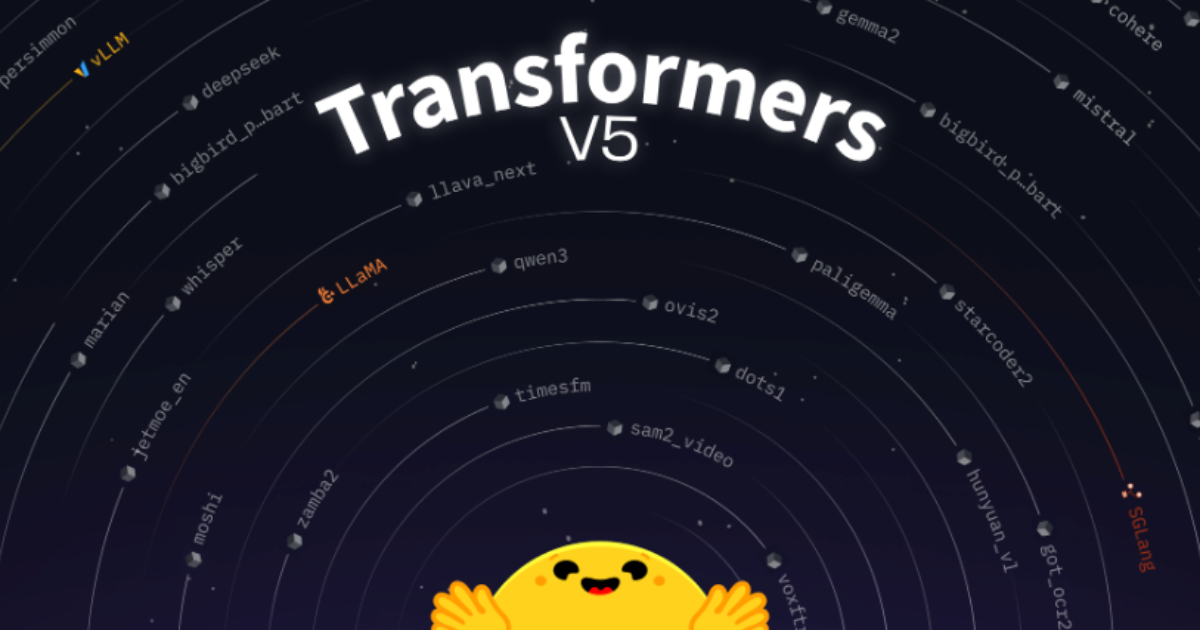

Transformers v5: Streamlining AI Development with Modularity and Interoperability

Alps Wang

Dec 17, 2025

Ecosystem Glue: The Future of Transformers

Transformers v5's emphasis on interoperability and simplification is a crucial step for the library's long-term sustainability. Making PyTorch the primary framework and streamlining the APIs for inference and deployment are both positive developments. However, the source article does not examine the technical challenges of the transition, such as potential backward-compatibility issues, and it does not quantify the performance improvements achieved. The focus on being a solid reference backend is sound, but the library arguably still trails specialized engines in areas such as inference speed and memory optimization.

Key Points

- Transformers v5 introduces a modular architecture to reduce duplication and standardize components, improving maintainability.

- The library makes PyTorch its primary framework while maintaining compatibility with the JAX ecosystem.

- The release adds enhancements for large-scale pretraining and streamlines the inference APIs, including 'transformers serve' and quantization support.
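
To make the first key point concrete, here is a minimal sketch of how a modular design can reduce duplication: a shared component is defined once, and a model variant overrides only the part that differs. This is plain Python for illustration, not the library's actual code. Every name in it (`BaseConfig`, `BaseAttention`, `BaseModel`, `VariantAttention`, `VariantModel`) is hypothetical, and the scaling rule in the variant is an assumption chosen only to show an override.

```python
from dataclasses import dataclass


@dataclass
class BaseConfig:
    """Hypothetical configuration shared by every model in this sketch."""
    hidden_size: int = 8
    num_heads: int = 2


class BaseAttention:
    """Shared component, defined once and reused by model variants."""

    def __init__(self, config: BaseConfig):
        self.head_dim = config.hidden_size // config.num_heads
        # Conventional 1/sqrt(head_dim) scaling.
        self.scale = self.head_dim ** -0.5


class BaseModel:
    """Builds its attention layer from a swappable class attribute."""
    attention_class = BaseAttention

    def __init__(self, config: BaseConfig):
        self.attention = self.attention_class(config)


class VariantAttention(BaseAttention):
    """Overrides only what differs; everything else is inherited."""

    def __init__(self, config: BaseConfig):
        super().__init__(config)
        # Hypothetical difference: scale by 1/head_dim instead.
        self.scale = 1.0 / self.head_dim


class VariantModel(BaseModel):
    """A new model is a one-line change, not a copied file."""
    attention_class = VariantAttention


base = BaseModel(BaseConfig())
variant = VariantModel(BaseConfig())
print(type(base.attention).__name__, base.attention.scale)
print(type(variant.attention).__name__, variant.attention.scale)
```

The variant carries no copy of the head-dimension logic, so a fix to `BaseAttention` reaches every model built on it. That is the maintainability benefit the key point describes.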

📖 Source: Transformers v5 Introduces a More Modular and Interoperable Core
